Introduction

Kubernetes Container Storage Interface (CSI) Documentation

This site documents how to develop, deploy, and test a Container Storage Interface (CSI) driver on Kubernetes.

The Container Storage Interface (CSI) is a standard for exposing arbitrary block and file storage systems to containerized workloads on Container Orchestration Systems (COs) like Kubernetes. Using CSI, third-party storage providers can write and deploy plugins exposing new storage systems in Kubernetes without ever having to touch the core Kubernetes code.

The target audience for this site is third-party developers interested in developing CSI drivers for Kubernetes.

Kubernetes users interested in how to deploy or manage an existing CSI driver on Kubernetes should look at the documentation provided by the author of the CSI driver.

Kubernetes users interested in how to use a CSI driver should look at kubernetes.io documentation.

Kubernetes Releases

| Kubernetes | CSI Spec Compatibility | Status |
|---|---|---|
| v1.9 | v0.1.0 | Alpha |
| v1.10 | v0.2.0 | Beta |
| v1.11 | v0.3.0 | Beta |
| v1.13 | v0.3.0, v1.0.0 | GA |

Development and Deployment

Minimum Requirements (for Developing and Deploying a CSI driver for Kubernetes)

Kubernetes is as minimally prescriptive about packaging and deployment of a CSI Volume Driver as possible.

The only requirements are around how Kubernetes (master and node) components find and communicate with a CSI driver.

Specifically, the following is dictated by Kubernetes regarding CSI:

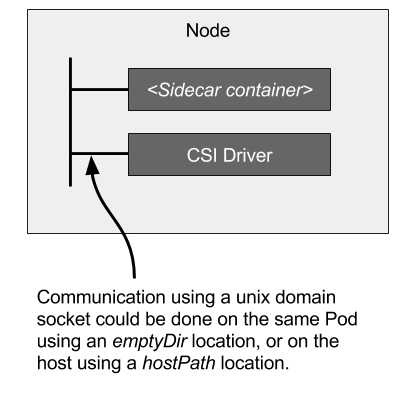

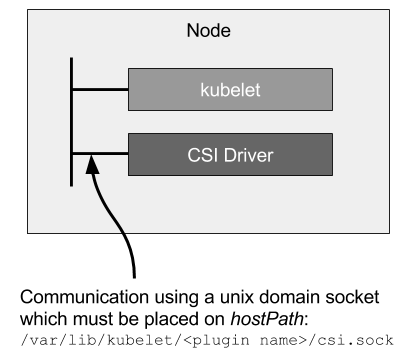

- Kubelet to CSI Driver Communication
  - Kubelet directly issues CSI calls (like `NodeStageVolume`, `NodePublishVolume`, etc.) to CSI drivers via a Unix Domain Socket to mount and unmount volumes.
  - Kubelet discovers CSI drivers (and the Unix Domain Socket to use to interact with a CSI driver) via the kubelet plugin registration mechanism.
  - Therefore, all CSI drivers deployed on Kubernetes MUST register themselves using the kubelet plugin registration mechanism on each supported node.
- Master to CSI Driver Communication
  - Kubernetes master components do not communicate directly (via a Unix Domain Socket or otherwise) with CSI drivers.
  - Kubernetes master components interact only with the Kubernetes API.
  - Therefore, CSI drivers that require operations that depend on the Kubernetes API (like volume create, volume attach, volume snapshot, etc.) MUST watch the Kubernetes API and trigger the appropriate CSI operations against it.

Because these requirements are minimally prescriptive, CSI driver developers are free to implement and deploy their drivers as they see fit.

That said, to ease development and deployment, the mechanism described below is recommended.

Recommended Mechanism (for Developing and Deploying a CSI driver for Kubernetes)

The Kubernetes development team has established a "Recommended Mechanism" for developing, deploying, and testing CSI Drivers on Kubernetes. It aims to reduce boilerplate code and simplify the overall process for CSI Driver developers.

This "Recommended Mechanism" makes use of the following components:

- Kubernetes CSI Sidecar Containers

- Kubernetes CSI objects

- CSI Driver Testing tools

To implement a CSI driver using this mechanism, a CSI driver developer should:

- Create a containerized application implementing the Identity, Node, and optionally the Controller services described in the CSI specification (the CSI driver container).
  - See Developing CSI Driver for more information.
- Unit test it using csi-sanity.
  - See Driver - Unit Testing for more information.
- Define Kubernetes API YAML files that deploy the CSI driver container along with appropriate sidecar containers.
  - See Deploying in Kubernetes for more information.
- Deploy the driver on a Kubernetes cluster and run end-to-end functional tests on it.

Reference Links

Developing CSI Driver for Kubernetes

Remain Informed

All developers of CSI drivers should join https://groups.google.com/forum/#!forum/container-storage-interface-drivers-announce to remain informed about changes to CSI or Kubernetes that may affect existing CSI drivers.

Overview

The first step to creating a CSI driver is writing an application implementing the gRPC services described in the CSI specification.

At a minimum, CSI drivers must implement the following CSI services:

- CSI `Identity` service
  - Enables callers (Kubernetes components and CSI sidecar containers) to identify the driver and what optional functionality it supports.
- CSI `Node` service
  - Only `NodePublishVolume`, `NodeUnpublishVolume`, and `NodeGetCapabilities` are required.
  - Required methods enable callers to make a volume available at a specified path and discover what optional functionality the driver supports.

All CSI services may be implemented in the same CSI driver application. The CSI driver application should be containerized to make it easy to deploy on Kubernetes. Once containerized, the CSI driver can be paired with CSI Sidecar Containers and deployed in node and/or controller mode as appropriate.
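The Go sketch below makes the minimum service set concrete: one application implements both the Identity and Node services. The interfaces are hypothetical simplifications and are not the generated types from the CSI spec's Go bindings. The driver only records publications in memory and does not mount anything, and the driver name is made up. The sketch does show one property real drivers need: repeating `NodePublishVolume` or `NodeUnpublishVolume` with the same arguments succeeds.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Hypothetical, simplified stand-ins for the CSI Identity and Node services.
// The real Go interfaces are generated from the CSI protobuf definitions and
// take a context.Context plus request/response messages.
type IdentityServer interface {
	GetPluginInfo() (name, vendorVersion string)
}

type NodeServer interface {
	NodePublishVolume(volumeID, targetPath string) error
	NodeUnpublishVolume(volumeID, targetPath string) error
	NodeGetCapabilities() []string
}

type publication struct{ volumeID, targetPath string }

// driver implements both services in a single application.
type driver struct {
	mu        sync.Mutex
	published map[publication]bool
}

// Compile-time checks that driver satisfies both interfaces.
var (
	_ IdentityServer = (*driver)(nil)
	_ NodeServer     = (*driver)(nil)
)

func newDriver() *driver { return &driver{published: map[publication]bool{}} }

// The driver name and version are made-up examples.
func (d *driver) GetPluginInfo() (string, string) { return "example.csi.k8s.io", "0.1.0" }

// NodePublishVolume records the publication; a real driver would mount the
// volume at targetPath. Repeating the call with the same arguments succeeds.
func (d *driver) NodePublishVolume(volumeID, targetPath string) error {
	if volumeID == "" || targetPath == "" {
		return errors.New("volume ID and target path are required")
	}
	d.mu.Lock()
	defer d.mu.Unlock()
	d.published[publication{volumeID, targetPath}] = true
	return nil
}

// NodeUnpublishVolume is also idempotent: unpublishing twice is not an error.
func (d *driver) NodeUnpublishVolume(volumeID, targetPath string) error {
	d.mu.Lock()
	defer d.mu.Unlock()
	delete(d.published, publication{volumeID, targetPath})
	return nil
}

// No optional node capabilities (such as STAGE_UNSTAGE_VOLUME) are advertised.
func (d *driver) NodeGetCapabilities() []string { return nil }

func main() {
	d := newDriver()
	name, version := d.GetPluginInfo()
	fmt.Println(name, version)
	if err := d.NodePublishVolume("vol-1", "/tmp/target"); err != nil {
		fmt.Println("publish failed:", err)
	}
}
```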

Capabilities

If your driver supports additional features, CSI "capabilities" can be used to advertise the optional methods/services it supports, for example:

- `CONTROLLER_SERVICE` (`PluginCapability`)
  - The entire CSI `Controller` service is optional. This capability indicates the driver implements one or more of the methods in the CSI `Controller` service.
- `VOLUME_ACCESSIBILITY_CONSTRAINTS` (`PluginCapability`)
  - This capability indicates the volumes for this driver may not be equally accessible from all nodes in the cluster, and that the driver will return additional topology-related information that Kubernetes can use to schedule workloads more intelligently or to influence where a volume will be provisioned.
- `VolumeExpansion` (`PluginCapability`)
  - This capability indicates the driver supports resizing (expanding) volumes after creation.
- `CREATE_DELETE_VOLUME` (`ControllerServiceCapability`)
  - This capability indicates the driver supports dynamic volume provisioning and deletion.
- `PUBLISH_UNPUBLISH_VOLUME` (`ControllerServiceCapability`)
  - This capability indicates the driver implements `ControllerPublishVolume` and `ControllerUnpublishVolume`, the operations that correspond to the Kubernetes volume attach/detach operations. For the Google Cloud PD CSI Driver, for example, this may result in a "volume attach" operation against the Google Cloud control plane to attach the specified volume to the specified node.
- `CREATE_DELETE_SNAPSHOT` (`ControllerServiceCapability`)
  - This capability indicates the driver supports provisioning volume snapshots and the ability to provision new volumes using those snapshots.
- `CLONE_VOLUME` (`ControllerServiceCapability`)
  - This capability indicates the driver supports cloning of volumes.
- `STAGE_UNSTAGE_VOLUME` (`NodeServiceCapability`)
  - This capability indicates the driver implements `NodeStageVolume` and `NodeUnstageVolume`, the operations that correspond to the Kubernetes volume device mount/unmount operations. This may, for example, be used to create a global (per-node) volume mount of a block storage device.

This is a partial list; please see the CSI spec for a complete list of capabilities. Also see the Features section to understand how a feature integrates with Kubernetes.

Versioning, Support, and Kubernetes Compatibility

Versioning

Each Kubernetes CSI component version is expressed as x.y.z, where x is the major version, y is the minor version, and z is the patch version, following Semantic Versioning.

Patch version releases only contain bug fixes that do not break backwards compatibility.

Minor version releases may contain new functionality that does not break backwards compatibility (except for alpha features).

Major version releases may contain new functionality or fixes that may break backwards compatibility with previous major releases. Changes that require a major version increase include removing or changing an API, flags, or behavior; new RBAC requirements that are not opt-in; and new minimum Kubernetes version requirements.

A litmus test for not breaking compatibility is to replace the image of a component in an existing deployment without changing that deployment in any other way.

To minimize the number of branches we need to support, we do not have a general policy for releasing new minor versions on older majors. We will make exceptions for work related to meeting production readiness requirements. Only the previous major version will be eligible for these exceptions, so long as the time between the previous major version and the current major version is under six months. For example, if "X.0.0" and "X+1.0.0" were released under six months apart, "X.0.0" would be eligible for new minor releases.

Support

The Kubernetes CSI project follows the broader Kubernetes project on support. Every minor release branch will be supported with patch releases on an as-needed basis for at least 1 year, starting with the first release of that minor version. In addition, the minor release branch will be supported for at least 3 months after the next minor version is released, to allow time to integrate with the latest release.

Alpha Features

Alpha features may break or be removed across Kubernetes and CSI component releases. There is no guarantee that alpha features will continue to function after upgrading the Kubernetes cluster or upgrading a CSI sidecar or controller.

Kubernetes Compatibility

Each release of a CSI component has a minimum, maximum and recommended Kubernetes version that it is compatible with.

Minimum Version

Minimum version specifies the lowest Kubernetes version where the component will function with the most basic functionality, and no additional features added later. Generally, this aligns with the Kubernetes version where that CSI spec version was added.

Maximum Version

The maximum Kubernetes version specifies the last working Kubernetes version for all beta and GA features that the component supports. This generally aligns with the Kubernetes release immediately before support for the CSI spec version was removed, or before a Kubernetes API or feature the component relies on was removed.

Recommended Version

Note that newly added features may depend on Kubernetes versions greater than the minimum Kubernetes version. The recommended Kubernetes version specifies the lowest Kubernetes version on which all of the component's supported features will function correctly. A new sidecar feature may not function correctly on a cluster running below the recommended Kubernetes version, so it is encouraged to stay as close as possible to the recommended Kubernetes version.

For more details on which features are supported with which Kubernetes versions and their corresponding CSI components, please see each feature's individual page.
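The three versions can be combined into a simple check. The Go sketch below uses hypothetical names and classifies a cluster version into one of three categories:

- unsupported: outside the minimum and maximum versions.
- basic: at or above the minimum, but below the recommended version.
- full: at or above the recommended version.

The sketch compares only the minor number of `v1.N` versions. It does not parse table entries such as "v1.29, v1.31 for beta features".

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// compat holds the Kubernetes versions a component release declares, written
// as in the tables ("v1.29"); an empty Max means no maximum ("-").
type compat struct{ Min, Max, Recommended string }

// k8sMinor extracts N from "v1.N" or "v1.N.P".
func k8sMinor(v string) (int, error) {
	parts := strings.Split(strings.TrimPrefix(v, "v"), ".")
	if len(parts) < 2 || parts[0] != "1" {
		return 0, fmt.Errorf("unsupported Kubernetes version %q", v)
	}
	return strconv.Atoi(parts[1])
}

// check returns "unsupported" outside [Min, Max], "basic" from Min up to (but
// excluding) Recommended, and "full" at or above Recommended.
func check(c compat, cluster string) (string, error) {
	cl, err := k8sMinor(cluster)
	if err != nil {
		return "", err
	}
	minV, err := k8sMinor(c.Min)
	if err != nil {
		return "", err
	}
	if cl < minV {
		return "unsupported", nil
	}
	if c.Max != "" {
		maxV, err := k8sMinor(c.Max)
		if err != nil {
			return "", err
		}
		if cl > maxV {
			return "unsupported", nil
		}
	}
	rec, err := k8sMinor(c.Recommended)
	if err != nil {
		return "", err
	}
	if cl < rec {
		return "basic", nil
	}
	return "full", nil
}

func main() {
	// Values from the external-attacher v4.8.0 row of its table.
	attacher := compat{Min: "v1.17", Recommended: "v1.29"}
	for _, v := range []string{"v1.16", "v1.25", "v1.30"} {
		status, err := check(attacher, v)
		fmt.Println(v, status, err)
	}
}
```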

Kubernetes Changelog

This page summarizes major CSI changes made in each Kubernetes release. For details on individual features, visit the Features section.

Kubernetes 1.28

Features

- Removals:

- Deprecations:

Kubernetes 1.27

Features

- Beta

- Alpha

Kubernetes 1.26

Features

- GA
  - Delegate fsgroup to CSI driver
  - Azure File CSI migration
  - vSphere CSI migration
- Alpha
  - Cross namespace volume provisioning

Kubernetes 1.25

Features

- GA
  - CSI ephemeral inline volumes
  - Core CSI migration
  - AWS EBS CSI migration
  - GCE PD CSI migration
- Beta
  - vSphere CSI Migration (on-by-default)
  - Portworx CSI Migration (off-by-default)
- Alpha

Deprecation

- In-tree plugin removal:
  - AWS EBS
  - Azure Disk

Kubernetes 1.24

Features

- GA
  - Volume expansion
  - Storage capacity tracking
  - Azure Disk CSI Migration
  - OpenStack Cinder CSI Migration
- Beta
  - Volume populator
- Alpha
  - SELinux relabeling with mount options
  - Prevent volume mode conversion

Kubernetes 1.23

Features

- GA
  - CSI fsgroup policy
  - Non-recursive fsgroup ownership
  - Generic ephemeral volumes
- Beta
  - Delegate fsgroup to CSI driver
  - Azure Disk CSI Migration (on-by-default)
  - AWS EBS CSI Migration (on-by-default)
  - GCE PD CSI Migration (on-by-default)
- Alpha
  - Recover from Expansion Failure
  - Honor PV Reclaim Policy
  - RBD CSI Migration
  - Portworx CSI migration

Kubernetes 1.22

Features

- GA
  - Windows CSI (CSI-Proxy API v1)
  - Pod token requests (CSIServiceAccountToken)
- Alpha
  - ReadWriteOncePod access mode
  - Delegate fsgroup to CSI driver
  - Generic data populators

Kubernetes 1.21

Features

- Beta
  - Pod token requests (CSIServiceAccountToken)
  - Storage capacity tracking
  - Generic ephemeral volumes

Kubernetes 1.20

Breaking Changes

- Kubelet no longer creates the target_path for NodePublishVolume in accordance with the CSI spec. Kubelet also no longer checks if staging and target paths are mounts or corrupted. CSI drivers need to be idempotent and do any necessary mount verification.

Features

- GA
  - Volume snapshots and restore
- Beta
  - CSI fsgroup policy
  - Non-recursive fsgroup ownership
- Alpha
  - Pod token requests (CSIServiceAccountToken)

Kubernetes 1.19

Deprecations

- Calling `NodeExpandVolume` between `NodeStageVolume` and `NodePublishVolume` is deprecated for CSI volumes. CSI drivers with the node `EXPAND_VOLUME` capability should support `NodeExpandVolume` being called after `NodePublishVolume`.

Features

- Beta
  - CSI on Windows
  - CSI migration for AzureDisk and vSphere drivers
- Alpha
  - CSI fsgroup policy
  - Generic ephemeral volumes
  - Storage capacity tracking
  - Volume health monitoring

Kubernetes 1.18

Deprecations

- The `storage.k8s.io/v1beta1` `CSIDriver` object has been deprecated and will be removed in a future release.
- In a future release, kubelet will no longer create the CSI `NodePublishVolume` target directory, in accordance with the CSI specification. CSI drivers may need to be updated accordingly to properly create and process the target path.

Features

- GA
  - Raw block volumes
  - Volume cloning
  - Skip attach
  - Pod info on mount
- Beta
  - CSI migration for OpenStack Cinder driver.
- Alpha
  - CSI on Windows
- `storage.k8s.io/v1` `CSIDriver` object introduced.

Kubernetes 1.17

Breaking Changes

- CSI 0.3 support has been removed. CSI 0.3 drivers will no longer function.

Deprecations

- The `storage.k8s.io/v1beta1` `CSINode` object has been deprecated and will be removed in a future release.

Features

- GA
  - Volume topology
  - Volume limits
- Beta
  - Volume snapshots and restore
  - CSI migration for AWS EBS and GCE PD drivers
- `storage.k8s.io/v1` `CSINode` object introduced.

Kubernetes 1.16

Features

- Beta
  - Volume cloning
  - Volume expansion
  - Ephemeral local volumes

Kubernetes 1.15

Features

- Volume capacity usage metrics
- Alpha
  - Volume cloning
  - Ephemeral local volumes
  - Resizing secrets

Kubernetes 1.14

Breaking Changes

- `csi.storage.k8s.io/v1alpha1` `CSINodeInfo` and `CSIDriver` CRDs are no longer supported.

Features

- Beta
  - Topology
  - Raw block
  - Skip attach
  - Pod info on mount
- Alpha
  - Volume expansion
- `storage.k8s.io/v1beta1` `CSINode` and `CSIDriver` objects introduced.

Kubernetes 1.13

Deprecations

- CSI spec 0.2 and 0.3 are deprecated and support will be removed in Kubernetes 1.17.

Features

- GA support added for CSI spec 1.0.

Kubernetes 1.12

Breaking Changes

- Kubelet device plugin registration is enabled by default, which requires CSI plugins to use `driver-registrar:v0.3.0` to register with kubelet.

Features

- Alpha
  - Snapshots
  - Topology
  - Skip attach
  - Pod info on mount
- `csi.storage.k8s.io/v1alpha1` `CSINodeInfo` and `CSIDriver` CRDs were introduced and have to be installed before deploying a CSI driver.

Kubernetes 1.11

Features

- Beta support added for CSI spec 0.3.
- Alpha
  - Raw block

Kubernetes 1.10

Breaking Changes

- CSI spec 0.1 is no longer supported.

Features

- Beta support added for CSI spec 0.2.
  - This added optional `NodeStageVolume` and `NodeUnstageVolume` calls, which map to the Kubernetes `MountDevice` and `UnmountDevice` operations.

Kubernetes 1.9

Features

- Alpha support added for CSI spec 0.1.

Kubernetes Cluster Controllers

The Kubernetes cluster controllers are responsible for managing snapshot objects and operations across multiple CSI drivers, so they should be bundled and deployed by the Kubernetes distributors as part of their Kubernetes cluster management process (independent of any CSI Driver).

The Kubernetes development team maintains the following Kubernetes cluster controllers:

Snapshot Controller

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-snapshotter

Status: GA v4.0.0+

When Volume Snapshot was promoted to Beta in Kubernetes 1.17, the CSI external-snapshotter sidecar controller was split into two controllers: a snapshot-controller and a CSI external-snapshotter sidecar. See the following table for snapshot-controller release information.

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |
|---|---|---|---|---|---|---|---|
| external-snapshotter v8.2.0 | release-8.2 | v1.11.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v8.2.0 | v1.25 | - | v1.25 |
| external-snapshotter v8.1.0 | release-8.1 | v1.11.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v8.1.0 | v1.25 | - | v1.25 |
| external-snapshotter v8.0.2 | release-8.0 | v1.9.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v8.0.2 | v1.25 | - | v1.25 |

Unsupported Versions


| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |
|---|---|---|---|---|---|---|---|
| external-snapshotter v6.3.0 | release-6.3 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v6.2.1 | v1.20 | - | v1.24 |
| external-snapshotter v6.2.2 | release-6.2 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v6.2.1 | v1.20 | - | v1.24 |
| external-snapshotter v6.1.0 | release-6.1 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v6.1.0 | v1.20 | - | v1.24 |
| external-snapshotter v6.0.1 | release-6.0 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v6.0.1 | v1.20 | - | v1.24 |
| external-snapshotter v5.0.1 | release-5.0 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v5.0.1 | v1.20 | - | v1.22 |
| external-snapshotter v4.2.1 | release-4.2 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v4.2.1 | v1.20 | - | v1.22 |
| external-snapshotter v4.1.1 | release-4.1 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v4.1.1 | v1.20 | - | v1.20 |
| external-snapshotter v4.0.1 | release-4.0 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v4.0.1 | v1.20 | - | v1.20 |
| external-snapshotter v3.0.3 (beta) | release-3.0 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v3.0.3 | v1.17 | - | v1.17 |
| external-snapshotter v2.1.4 (beta) | release-2.1 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v2.1.4 | v1.17 | - | v1.17 |

For more information on the CSI external-snapshotter sidecar, see this external-snapshotter page.

Description

The snapshot controller watches the Kubernetes API server for VolumeSnapshot and VolumeSnapshotContent CRD objects. The CSI external-snapshotter sidecar only watches the Kubernetes API server for VolumeSnapshotContent CRD objects. The snapshot controller creates the VolumeSnapshotContent CRD object, which triggers the CSI external-snapshotter sidecar to create a snapshot on the storage system.

When Volume Group Snapshot support is enabled via the `--enable-volume-group-snapshots` option, the snapshot controller also watches VolumeGroupSnapshot and VolumeGroupSnapshotContent CRD objects.
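The division of labor between the snapshot controller and the CSI external-snapshotter sidecar can be modeled in a few lines of Go. This is an in-memory sketch and does not reproduce the controllers' code. The object types are heavily simplified hypothetical structs, the `snapcontent-` naming is illustrative, and `createSnapshot` stands in for the CSI `CreateSnapshot` call. The sketch shows that the controller turns VolumeSnapshots into VolumeSnapshotContents, while the sidecar acts only on VolumeSnapshotContents.

```go
package main

import "fmt"

// Hypothetical, heavily simplified stand-ins for the snapshot API objects.
type VolumeSnapshot struct {
	Name         string
	SourcePVC    string
	BoundContent string // name of the VolumeSnapshotContent bound to this snapshot
}

type VolumeSnapshotContent struct {
	Name           string
	SnapshotRef    string // the VolumeSnapshot this content belongs to
	SourceVolume   string // what the driver is asked to snapshot
	SnapshotHandle string // set once the storage system has taken the snapshot
	ReadyToUse     bool
}

type store struct {
	snapshots map[string]*VolumeSnapshot
	contents  map[string]*VolumeSnapshotContent
}

// snapshotController plays the common snapshot controller: for each
// VolumeSnapshot without content, it creates a VolumeSnapshotContent. (The
// real controller resolves the PVC to its PV's volume handle; this sketch
// just copies the PVC name.)
func snapshotController(s *store) {
	for _, vs := range s.snapshots {
		if vs.BoundContent != "" {
			continue
		}
		name := "snapcontent-" + vs.Name
		s.contents[name] = &VolumeSnapshotContent{
			Name: name, SnapshotRef: vs.Name, SourceVolume: vs.SourcePVC,
		}
		vs.BoundContent = name
	}
}

// snapshotterSidecar plays the CSI external-snapshotter sidecar: it looks only
// at VolumeSnapshotContents and calls the driver for those without a handle.
func snapshotterSidecar(s *store, createSnapshot func(sourceVolume string) (string, error)) error {
	for _, c := range s.contents {
		if c.SnapshotHandle != "" {
			continue
		}
		handle, err := createSnapshot(c.SourceVolume)
		if err != nil {
			return fmt.Errorf("content %s: %w", c.Name, err)
		}
		c.SnapshotHandle, c.ReadyToUse = handle, true
	}
	return nil
}

func main() {
	s := &store{
		snapshots: map[string]*VolumeSnapshot{"snap1": {Name: "snap1", SourcePVC: "pvc-a"}},
		contents:  map[string]*VolumeSnapshotContent{},
	}
	snapshotController(s)
	_ = snapshotterSidecar(s, func(src string) (string, error) { return "handle-" + src, nil })
	for _, c := range s.contents {
		fmt.Println(c.Name, c.SnapshotHandle, c.ReadyToUse)
	}
}
```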

For detailed snapshot beta design changes, see the design doc here.

For detailed information about volume snapshot and restore functionality, see Volume Snapshot & Restore.

For detailed information about volume group snapshot and restore functionality, see Volume Snapshot & Restore.

For detailed information (binary parameters, RBAC rules, etc.), see https://github.com/kubernetes-csi/external-snapshotter/blob/release-6.2/README.md.

Deployment

Kubernetes distributors should bundle and deploy the controller and CRDs as part of their Kubernetes cluster management process (independent of any CSI Driver).

If your cluster does not come pre-installed with the correct components, you may manually install these components by executing the following steps.

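```shell
# Fetch the external-snapshotter repository and switch to the release branch.
git clone https://github.com/kubernetes-csi/external-snapshotter/
cd ./external-snapshotter
git checkout release-6.2

# Install the snapshot CRDs.
kubectl kustomize client/config/crd | kubectl create -f -

# Install the common snapshot controller.
kubectl -n kube-system kustomize deploy/kubernetes/snapshot-controller | kubectl create -f -
```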

Snapshot Validation Webhook

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-snapshotter

Status: GA as of 4.0.0

There is a new validating webhook server that provides tightened validation on snapshot objects. This SHOULD be installed by Kubernetes distributions along with the snapshot-controller, not by end users. It SHOULD be installed in all Kubernetes clusters that have the snapshot feature enabled.

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |
|---|---|---|---|---|---|---|---|
| external-snapshotter v8.1.0 | release-8.1 | v1.11.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v8.1.0 | v1.25 | - | v1.25 |
| external-snapshotter v8.0.2 | release-8.0 | v1.9.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v8.0.2 | v1.25 | - | v1.25 |

Unsupported Versions


| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |
|---|---|---|---|---|---|---|---|
| external-snapshotter v6.3.0 | release-6.3 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v6.2.1 | v1.20 | - | v1.24 |
| external-snapshotter v6.2.2 | release-6.2 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v6.2.1 | v1.20 | - | v1.24 |
| external-snapshotter v6.1.0 | release-6.1 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v6.1.0 | v1.20 | - | v1.24 |
| snapshot-validation-webhook v6.0.1 | release-6.0 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v6.0.1 | v1.20 | - | v1.24 |
| snapshot-validation-webhook v5.0.1 | release-5.0 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v5.0.1 | v1.20 | - | v1.22 |
| snapshot-validation-webhook v4.2.1 | release-4.2 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v4.2.1 | v1.20 | - | v1.22 |
| snapshot-validation-webhook v4.1.1 | release-4.1 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v4.1.0 | v1.20 | - | v1.20 |
| snapshot-validation-webhook v4.0.1 | release-4.0 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v4.0.1 | v1.20 | - | v1.20 |
| snapshot-validation-webhook v3.0.3 | release-3.0 | v1.0.0 | - | registry.k8s.io/sig-storage/snapshot-validation-webhook:v3.0.3 | v1.17 | - | v1.17 |

Description

The snapshot validating webhook is an HTTP callback that responds to admission requests. It is part of a larger plan to tighten validation for volume snapshot objects, and it uses a ratcheting validation mechanism to phase in the tighter validation. The cluster admin or Kubernetes distribution admin should install the webhook alongside the snapshot controllers and CRDs.

WARNING: Cluster admins who choose not to install the webhook server and participate in the phased release process can run into problems later when upgrading from the `v1beta1` to the `v1` volumesnapshot API, if there are persisted objects that fail the new, stricter validation. Potential impacts include being unable to delete invalid snapshot objects.
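In one common form, ratcheting validation does three things:

- It applies the stricter rules to new objects.
- It applies them to updates of objects that are currently valid.
- It does not block updates to objects that were already persisted in an invalid state.

The Go sketch below shows this form. It assumes a single rule: exactly one of a VolumeSnapshot source's two fields must be set. The types and function names are hypothetical and do not reproduce the webhook's code.

```go
package main

import (
	"errors"
	"fmt"
)

// SnapshotSource is a hypothetical, simplified VolumeSnapshot source. The
// stricter rule assumed here: exactly one of the two fields must be set.
type SnapshotSource struct {
	PersistentVolumeClaimName string
	VolumeSnapshotContentName string
}

func validateStrict(s SnapshotSource) error {
	hasPVC := s.PersistentVolumeClaimName != ""
	hasContent := s.VolumeSnapshotContentName != ""
	if hasPVC == hasContent {
		return errors.New("exactly one of persistentVolumeClaimName and volumeSnapshotContentName must be set")
	}
	return nil
}

// admit applies ratcheting validation. Creates (old == nil) must pass the
// strict rules. Updates are held to the strict rules only if the stored
// object already passed them, so objects persisted before the rules existed
// are not locked in place.
func admit(old *SnapshotSource, updated SnapshotSource) error {
	if old != nil && validateStrict(*old) != nil {
		return nil
	}
	if err := validateStrict(updated); err != nil {
		return fmt.Errorf("denied: %w", err)
	}
	return nil
}

func main() {
	legacy := SnapshotSource{}          // invalid under the strict rule
	fmt.Println(admit(nil, legacy))     // create is denied
	fmt.Println(admit(&legacy, legacy)) // update of the legacy object is allowed
}
```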

Deployment

Kubernetes distributors should bundle and deploy the snapshot validation webhook along with the snapshot controller and CRDs as part of their Kubernetes cluster management process (independent of any CSI Driver).

Read more about how to install the example webhook here.

CSI Proxy

Status and Releases

Git Repository: https://github.com/kubernetes-csi/csi-proxy

Status: V1 starting with v1.0.0

| Version | Min K8s Version | Max K8s Version |
|---|---|---|
| v0.1.0 | 1.18 | - |
| v0.2.0+ | 1.18 | - |
| v1.0.0+ | 1.18 | - |

Description

CSI Proxy is a binary that exposes a set of gRPC APIs around storage operations over named pipes in Windows. A container, such as a CSI node plugin, can mount the named pipes for the operations it wants to exercise on the host and then invoke the APIs.

Each named pipe supports a specific version of an API (e.g. v1alpha1, v2beta1) that targets a specific area of storage (e.g. disk, volume, file, SMB, iSCSI). For example: `\\.\pipe\csi-proxy-filesystem-v1alpha1`, `\\.\pipe\csi-proxy-disk-v1beta1`. Any release of the csi-proxy.exe binary strives to maintain backward compatibility across as many prior stable versions of an API group as possible. Please see details in this CSI Windows support KEP.
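The pipe naming pattern in the examples above can be captured in a small Go helper. `pipeName` is hypothetical. The sketch assumes that every API group follows the `\\.\pipe\csi-proxy-<group>-<version>` pattern shown in the two examples.

```go
package main

import (
	"fmt"
	"regexp"
)

// versionRE accepts API versions like those above: v1, v1alpha1, v2beta1.
var versionRE = regexp.MustCompile(`^v[0-9]+((alpha|beta)[0-9]+)?$`)

// pipeName builds a csi-proxy named pipe path following the pattern
// \\.\pipe\csi-proxy-<api group>-<version> (assumed from the examples).
func pipeName(group, version string) (string, error) {
	if group == "" || !versionRE.MatchString(version) {
		return "", fmt.Errorf("invalid API group %q or version %q", group, version)
	}
	return `\\.\pipe\csi-proxy-` + group + "-" + version, nil
}

func main() {
	name, err := pipeName("filesystem", "v1")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(name)
}
```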

Usage

Run the csi-proxy.exe binary directly on a Windows node. The command line options are:

- `-kubelet-path`: the prefix path of the kubelet directory in the host file system (the default value is `C:\var\lib\kubelet`).
- `-windows-service`: configure csi-proxy to run as a Windows Service.
- `-log_file`: if non-empty, use this log file. (Note: `-logtostderr=false` must be set when setting `-log_file`.)

Note that `-kubelet-pod-path` and `-kubelet-csi-plugins-path` were used in versions prior to 1.0.0; they have been replaced by the new `-kubelet-path` parameter.

For detailed information (binary parameters, etc.), see the README of the relevant branch.

Deployment

It is the responsibility of the Kubernetes distribution or cluster admin to install csi-proxy. Run the csi-proxy.exe binary directly, or run it as a Windows Service on Kubernetes nodes. For example:

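```powershell
# Log to a file instead of stderr while running as a Windows Service.
$flags = "-windows-service -log_file=\etc\kubernetes\logs\csi-proxy.log -logtostderr=false"
# Register the service; NODE_DIR is expected to hold the directory containing csi-proxy.exe.
sc.exe create csiproxy binPath= "${env:NODE_DIR}\csi-proxy.exe $flags"
# Restart the service 10 seconds (10000 ms) after a failure.
sc.exe failure csiproxy reset= 0 actions= restart/10000
sc.exe start csiproxy
```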

Kubernetes CSI Sidecar Containers

Kubernetes CSI Sidecar Containers are a set of standard containers that aim to simplify the development and deployment of CSI Drivers on Kubernetes.

These containers contain common logic to watch the Kubernetes API, trigger appropriate operations against the “CSI volume driver” container, and update the Kubernetes API as appropriate.

The containers are intended to be bundled with third-party CSI driver containers and deployed together as pods.

The containers are developed and maintained by the Kubernetes Storage community.

Use of the containers is strictly optional, but highly recommended.

Benefits of these sidecar containers include:

- Reduction of "boilerplate" code.
  - CSI Driver developers do not have to worry about complicated, "Kubernetes specific" code.
- Separation of concerns.
  - Code that interacts with the Kubernetes API is isolated from (and in a different container than) the code that implements the CSI interface.

The Kubernetes development team maintains the following Kubernetes CSI Sidecar Containers:

- external-provisioner

- external-attacher

- external-snapshotter

- external-resizer

- node-driver-registrar

- cluster-driver-registrar (deprecated)

- livenessprobe

CSI external-attacher

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-attacher

Status: GA/Stable

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |
|---|---|---|---|---|---|---|---|
| external-attacher v4.8.0 | release-4.8 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v4.8.0 | v1.17 | - | v1.29 |
| external-attacher v4.7.0 | release-4.7 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v4.7.0 | v1.17 | - | v1.29 |
| external-attacher v4.6.1 | release-4.6 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v4.6.1 | v1.17 | - | v1.29 |

Unsupported Versions


| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |
|---|---|---|---|---|---|---|---|
| external-attacher v4.4.0 | release-4.4 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v4.4.0 | v1.17 | - | v1.27 |
| external-attacher v4.3.0 | release-4.3 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v4.3.0 | v1.17 | - | v1.22 |
| external-attacher v4.2.0 | release-4.2 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v4.2.0 | v1.17 | - | v1.22 |
| external-attacher v4.1.0 | release-4.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v4.1.0 | v1.17 | - | v1.22 |
| external-attacher v4.0.0 | release-4.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v4.0.0 | v1.17 | - | v1.22 |
| external-attacher v3.5.1 | release-3.5 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v3.5.1 | v1.17 | - | v1.22 |
| external-attacher v3.4.0 | release-3.4 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v3.4.0 | v1.17 | - | v1.22 |
| external-attacher v3.3.0 | release-3.3 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v3.3.0 | v1.17 | - | v1.22 |
| external-attacher v3.2.1 | release-3.2 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v3.2.1 | v1.17 | - | v1.17 |
| external-attacher v3.1.0 | release-3.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v3.1.0 | v1.17 | - | v1.17 |
| external-attacher v3.0.2 | release-3.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-attacher:v3.0.2 | v1.17 | - | v1.17 |
| external-attacher v2.2.0 | release-2.2 | v1.0.0 | - | quay.io/k8scsi/csi-attacher:v2.2.0 | v1.14 | - | v1.17 |
| external-attacher v2.1.0 | release-2.1 | v1.0.0 | - | quay.io/k8scsi/csi-attacher:v2.1.0 | v1.14 | - | v1.17 |
| external-attacher v2.0.0 | release-2.0 | v1.0.0 | - | quay.io/k8scsi/csi-attacher:v2.0.0 | v1.14 | - | v1.15 |
| external-attacher v1.2.1 | release-1.2 | v1.0.0 | - | quay.io/k8scsi/csi-attacher:v1.2.1 | v1.13 | - | v1.15 |
| external-attacher v1.1.1 | release-1.1 | v1.0.0 | - | quay.io/k8scsi/csi-attacher:v1.1.1 | v1.13 | - | v1.14 |
| external-attacher v0.4.2 | release-0.4 | v0.3.0 | v0.3.0 | quay.io/k8scsi/csi-attacher:v0.4.2 | v1.10 | v1.16 | v1.10 |

Description

The CSI external-attacher is a sidecar container that watches the Kubernetes API server for VolumeAttachment objects and triggers Controller[Publish|Unpublish]Volume operations against a CSI endpoint.

Usage

CSI drivers that require integrating with the Kubernetes volume attach/detach hooks should use this sidecar container, and advertise the CSI PUBLISH_UNPUBLISH_VOLUME controller capability.

For detailed information (binary parameters, RBAC rules, etc.), see https://github.com/kubernetes-csi/external-attacher/blob/master/README.md.

Deployment

The CSI external-attacher is deployed as a controller. See deployment section for more details.

CSI external-provisioner

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-provisioner

Status: GA/Stable

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |

|---|---|---|---|---|---|---|---|

| external-provisioner v5.2.0 | release-5.2 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v5.2.0 | v1.20 | - | v1.29, v1.31 for beta features |

| external-provisioner v5.1.0 | release-5.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v5.1.0 | v1.20 | - | v1.29, v1.31 for beta features |

| external-provisioner v5.0.2 | release-5.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v5.0.2 | v1.20 | - | v1.27 |

Unsupported Versions

List of previous versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |

|---|---|---|---|---|---|---|---|

| external-provisioner v3.6.0 | release-3.6 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v3.6.0 | v1.20 | - | v1.27 |

| external-provisioner v3.5.0 | release-3.5 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v3.5.0 | v1.20 | - | v1.26 |

| external-provisioner v3.4.1 | release-3.4 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v3.4.1 | v1.20 | - | v1.26 |

| external-provisioner v3.3.1 | release-3.3 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v3.3.1 | v1.20 | - | v1.25 |

| external-provisioner v3.2.2 | release-3.2 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v3.2.2 | v1.20 | - | v1.22 |

| external-provisioner v3.1.1 | release-3.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v3.1.1 | v1.20 | - | v1.22 |

| external-provisioner v3.0.0 | release-3.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v3.0.0 | v1.20 | - | v1.22 |

| external-provisioner v2.2.2 | release-2.2 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v2.2.2 | v1.17 | - | v1.21 |

| external-provisioner v2.1.2 | release-2.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v2.1.2 | v1.17 | - | v1.19 |

| external-provisioner v2.0.5 | release-2.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v2.0.5 | v1.17 | - | v1.19 |

| external-provisioner v1.6.1 | release-1.6 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-provisioner:v1.6.1 | v1.13 | v1.21 | v1.18 |

| external-provisioner v1.5.0 | release-1.5 | v1.0.0 | - | quay.io/k8scsi/csi-provisioner:v1.5.0 | v1.13 | v1.21 | v1.17 |

| external-provisioner v1.4.0 | release-1.4 | v1.0.0 | - | quay.io/k8scsi/csi-provisioner:v1.4.0 | v1.13 | v1.21 | v1.16 |

| external-provisioner v1.3.1 | release-1.3 | v1.0.0 | - | quay.io/k8scsi/csi-provisioner:v1.3.1 | v1.13 | v1.19 | v1.15 |

| external-provisioner v1.2.0 | release-1.2 | v1.0.0 | - | quay.io/k8scsi/csi-provisioner:v1.2.0 | v1.13 | v1.19 | v1.14 |

| external-provisioner v0.4.2 | release-0.4 | v0.3.0 | v0.3.0 | quay.io/k8scsi/csi-provisioner:v0.4.2 | v1.10 | v1.16 | v1.10 |

Description

The CSI external-provisioner is a sidecar container that watches the Kubernetes API server for PersistentVolumeClaim objects.

It calls CreateVolume against the specified CSI endpoint to provision a new volume.

Volume provisioning is triggered when a new Kubernetes PersistentVolumeClaim object is created that references a StorageClass whose provisioner field matches the driver name returned by the specified CSI endpoint in the GetPluginInfo call.

Once a new volume is successfully provisioned, the sidecar container creates a Kubernetes PersistentVolume object to represent the volume.

Deleting a PersistentVolumeClaim object that is bound to a PersistentVolume owned by this driver, when that PersistentVolume has the Delete reclaim policy, causes the sidecar container to trigger a DeleteVolume operation against the specified CSI endpoint to delete the volume. Once the volume is successfully deleted, the sidecar container also deletes the PersistentVolume object representing the volume.
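The provisioning and deletion triggers described above can be expressed as two predicates. This is a hypothetical sketch over plain dicts that mirror a few PersistentVolumeClaim, StorageClass, and PersistentVolume fields. It ignores default StorageClasses, topology, and the annotations the real external-provisioner relies on.

```python
def should_provision(pvc: dict, storage_classes: dict, driver_name: str) -> bool:
    """Decide whether this driver should provision a volume for `pvc`."""
    spec = pvc["spec"]
    if spec.get("volumeName"):
        # Already bound to a PersistentVolume: nothing to provision.
        return False
    sc = storage_classes.get(spec.get("storageClassName") or "")
    if sc is None:
        # No (known) StorageClass referenced: not dynamically provisioned.
        return False
    # The StorageClass provisioner must match the GetPluginInfo name.
    return sc["provisioner"] == driver_name


def should_delete(pv: dict, driver_name: str) -> bool:
    """Decide whether to call DeleteVolume for a PersistentVolume."""
    spec = pv["spec"]
    return (
        spec.get("csi", {}).get("driver") == driver_name
        and spec.get("persistentVolumeReclaimPolicy") == "Delete"
        # A PV whose claim has been deleted moves to the Released phase.
        and pv.get("status", {}).get("phase") == "Released"
    )
```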

DataSources

The external-provisioner supports requesting that a volume be pre-populated from a data source during provisioning. For more information on how data sources are handled, see DataSources.

Snapshot

The CSI external-provisioner supports the Snapshot DataSource. If a VolumeSnapshot object is specified as the data source of a PVC, the sidecar container fetches information about the snapshot from its VolumeSnapshotContent object. It then populates the data source field of the resulting CreateVolume call to tell the storage system that the new volume should be populated from the specified snapshot.

PersistentVolumeClaim (clone)

Cloning is also implemented by specifying a data source of kind PersistentVolumeClaim in the DataSource field of a Provision request. It is the responsibility of the external-provisioner to verify three things about the claim specified in the DataSource object: it exists, it uses the same StorageClass as the volume being provisioned, and it is currently Bound.
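The three clone checks can be sketched as a validation function. It returns a reason string, or None when the source claim is acceptable. The claim index keyed by (namespace, name) is a hypothetical stand-in for an informer cache. A PVC's dataSource field has no namespace, so the source claim is looked up in the new claim's own namespace.

```python
from typing import Dict, Optional, Tuple


def clone_source_error(new_pvc: dict, pvcs: Dict[Tuple[str, str], dict]) -> Optional[str]:
    """Return None if the PVC data source is a valid clone source, else a reason."""
    source = new_pvc["spec"].get("dataSource") or {}
    if source.get("kind") != "PersistentVolumeClaim":
        return "dataSource is not a PersistentVolumeClaim"
    # dataSource carries no namespace: the source claim shares the new claim's.
    namespace = new_pvc["metadata"]["namespace"]
    src = pvcs.get((namespace, source["name"]))
    if src is None:
        return "source claim does not exist"
    if src["spec"].get("storageClassName") != new_pvc["spec"].get("storageClassName"):
        return "source claim uses a different StorageClass"
    if src.get("status", {}).get("phase") != "Bound":
        return "source claim is not Bound"
    return None
```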

StorageClass Parameters

When provisioning a new volume, the CSI external-provisioner sets the map<string, string> parameters field in the CSI CreateVolumeRequest call to the key/values specified in the StorageClass it is handling.

The CSI external-provisioner (v1.0.1+) also reserves the parameter keys prefixed with csi.storage.k8s.io/. Any StorageClass keys prefixed with csi.storage.k8s.io/ are not passed to the CSI driver as an opaque parameter.

The following reserved StorageClass parameter keys trigger behavior in the CSI external-provisioner:

- csi.storage.k8s.io/provisioner-secret-name
- csi.storage.k8s.io/provisioner-secret-namespace
- csi.storage.k8s.io/controller-publish-secret-name
- csi.storage.k8s.io/controller-publish-secret-namespace
- csi.storage.k8s.io/node-stage-secret-name
- csi.storage.k8s.io/node-stage-secret-namespace
- csi.storage.k8s.io/node-publish-secret-name
- csi.storage.k8s.io/node-publish-secret-namespace
- csi.storage.k8s.io/fstype

If the PVC VolumeMode is set to Filesystem, and the value of csi.storage.k8s.io/fstype is specified, it is used to populate the FsType in CreateVolumeRequest.VolumeCapabilities[x].AccessType and the AccessType is set to Mount.

For more information on how secrets are handled see Secrets & Credentials.

Example StorageClass:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gold-example-storage
provisioner: exampledriver.example.com
parameters:
  disk-type: ssd
  csi.storage.k8s.io/fstype: ext4
  csi.storage.k8s.io/provisioner-secret-name: mysecret
  csi.storage.k8s.io/provisioner-secret-namespace: mynamespace
```
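How the sidecar splits these parameters can be sketched as follows, using the example StorageClass above. This is an illustrative model, not the external-provisioner's implementation. It forwards every key outside the reserved csi.storage.k8s.io/ prefix as an opaque parameter and returns the fsType only for Filesystem volumes. It simply drops all reserved keys; check the sidecar source for how it treats reserved keys it does not recognize.

```python
from typing import Optional, Tuple

RESERVED_PREFIX = "csi.storage.k8s.io/"
FSTYPE_KEY = RESERVED_PREFIX + "fstype"


def split_storage_class_params(
    params: dict, volume_mode: str = "Filesystem"
) -> Tuple[dict, Optional[str]]:
    """Return (opaque parameters for CreateVolumeRequest, fsType or None)."""
    # Reserved keys are consumed by the sidecar and never forwarded.
    opaque = {k: v for k, v in params.items() if not k.startswith(RESERVED_PREFIX)}
    fs_type = None
    if volume_mode == "Filesystem":
        # Only filesystem volumes get a Mount access type carrying an fsType.
        fs_type = params.get(FSTYPE_KEY) or None
    return opaque, fs_type
```

With the example parameters, the driver would receive only disk-type: ssd, and the volume capability would request an ext4 mount.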

PersistentVolumeClaim and PersistentVolume Parameters

The CSI external-provisioner (v1.6.0+) introduces the --extra-create-metadata flag, which automatically sets the following map<string, string> parameters in the CSI CreateVolumeRequest:

- csi.storage.k8s.io/pvc/name
- csi.storage.k8s.io/pvc/namespace
- csi.storage.k8s.io/pv/name

These parameters are not part of the StorageClass, but are internally generated using the name and namespace of the source PersistentVolumeClaim and PersistentVolume.
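The effect of --extra-create-metadata can be sketched in two parts. The first merges the three keys into the parameter map, and the second is a driver-side helper that reads them back. The volume_tags helper and its output format are hypothetical. A driver might use something similar to label the volume in its backend, so that operators can map it back to the claim.

```python
PVC_NAME_KEY = "csi.storage.k8s.io/pvc/name"
PVC_NAMESPACE_KEY = "csi.storage.k8s.io/pvc/namespace"
PV_NAME_KEY = "csi.storage.k8s.io/pv/name"


def add_extra_create_metadata(params: dict, pvc_name: str, pvc_namespace: str, pv_name: str) -> dict:
    """Return a copy of `params` with the extra metadata keys added."""
    merged = dict(params)  # do not mutate the caller's map
    merged[PVC_NAME_KEY] = pvc_name
    merged[PVC_NAMESPACE_KEY] = pvc_namespace
    merged[PV_NAME_KEY] = pv_name
    return merged


def volume_tags(params: dict) -> dict:
    """Hypothetical driver-side helper: turn the metadata keys into backend tags."""
    return {
        "pvc": f"{params.get(PVC_NAMESPACE_KEY, '')}/{params.get(PVC_NAME_KEY, '')}",
        "pv": params.get(PV_NAME_KEY, ""),
    }
```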

Usage

CSI drivers that support dynamic volume provisioning should use this sidecar container, and advertise the CSI CREATE_DELETE_VOLUME controller capability.

For detailed information (binary parameters, RBAC rules, etc.), see https://github.com/kubernetes-csi/external-provisioner/blob/master/README.md.

Deployment

The CSI external-provisioner is deployed as a controller. See deployment section for more details.

CSI external-resizer

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-resizer

Status: Beta starting with v0.3.0

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |

|---|---|---|---|---|---|---|---|

| external-resizer v1.13.0 | release-1.13 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.13.0 | v1.16 | - | v1.32 |

| external-resizer v1.12.0 | release-1.12 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.12.0 | v1.16 | - | v1.21 |

| external-resizer v1.11.0 | release-1.11 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.11.0 | v1.16 | - | v1.29 |

Unsupported Versions

List of previous versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |

|---|---|---|---|---|---|---|---|

| external-resizer v1.9.0 | release-1.9 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.9.0 | v1.16 | - | v1.28 |

| external-resizer v1.8.0 | release-1.8 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.8.0 | v1.16 | - | v1.23 |

| external-resizer v1.7.0 | release-1.7 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.7.0 | v1.16 | - | v1.23 |

| external-resizer v1.6.0 | release-1.6 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.6.0 | v1.16 | - | v1.23 |

| external-resizer v1.5.0 | release-1.5 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.5.0 | v1.16 | - | v1.23 |

| external-resizer v1.4.0 | release-1.4 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.4.0 | v1.16 | - | v1.23 |

| external-resizer v1.3.0 | release-1.3 | v1.5.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.3.0 | v1.16 | - | v1.22 |

| external-resizer v1.2.0 | release-1.2 | v1.2.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.2.0 | v1.16 | - | v1.21 |

| external-resizer v1.1.0 | release-1.1 | v1.2.0 | - | registry.k8s.io/sig-storage/csi-resizer:v1.1.0 | v1.16 | - | v1.16 |

| external-resizer v1.0.1 | release-1.0 | v1.2.0 | - | quay.io/k8scsi/csi-resizer:v1.0.1 | v1.16 | - | v1.16 |

| external-resizer v0.5.0 | release-0.5 | v1.2.0 | - | quay.io/k8scsi/csi-resizer:v0.5.0 | v1.15 | - | v1.16 |

| external-resizer v0.2.0 | release-0.2 | v1.1.0 | - | quay.io/k8scsi/csi-resizer:v0.2.0 | v1.15 | - | v1.15 |

| external-resizer v0.1.0 | release-0.1 | v1.1.0 | - | quay.io/k8scsi/csi-resizer:v0.1.0 | v1.14 | v1.14 | v1.14 |

Description

The CSI external-resizer is a sidecar container that watches the Kubernetes API server for PersistentVolumeClaim object edits and triggers ControllerExpandVolume operations against a CSI endpoint if a user requests more storage on a PersistentVolumeClaim object.
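The expansion check boils down to comparing the requested size in the claim's spec with the capacity reported in its status. The sketch below assumes that comparison. It parses only the common binary (Ki, Mi, Gi, ...) and decimal (k, M, G, ...) suffixes of Kubernetes quantities. Milli values and the other forms accepted by resource.Quantity are not handled.

```python
from decimal import Decimal

# Subset of Kubernetes quantity suffixes (no milli or exponent forms).
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18,
}


def parse_quantity(q: str) -> Decimal:
    """Parse a storage quantity such as '10Gi' or '500M' into bytes."""
    # Check two-letter binary suffixes before one-letter decimal ones.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(q)


def needs_expansion(pvc: dict) -> bool:
    """True when the requested size exceeds the current capacity."""
    requested = parse_quantity(pvc["spec"]["resources"]["requests"]["storage"])
    capacity = parse_quantity(pvc["status"]["capacity"]["storage"])
    return requested > capacity
```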

Usage

CSI drivers that support Kubernetes volume expansion should use this sidecar container, and advertise the CSI VolumeExpansion plugin capability.

Deployment

The CSI external-resizer is deployed as a controller. See deployment section for more details.

CSI external-snapshotter

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-snapshotter

Status: GA v4.0.0+

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |

|---|---|---|---|---|---|---|---|

| external-snapshotter v8.2.0 | release-8.2 | v1.11.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v8.2.0 registry.k8s.io/sig-storage/csi-snapshotter:v8.2.0 | v1.25 | - | v1.25 |

| external-snapshotter v8.1.0 | release-8.1 | v1.11.0 | - | registry.k8s.io/sig-storage/snapshot-controller:v8.1.0 registry.k8s.io/sig-storage/csi-snapshotter:v8.1.0 registry.k8s.io/sig-storage/snapshot-validation-webhook:v8.1.0 | v1.25 | - | v1.25 |

| external-snapshotter v8.0.2 | release-8.0 | v1.9.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v8.0.2 registry.k8s.io/sig-storage/snapshot-validation-webhook:v8.0.2 | v1.25 | - | v1.25 |

Unsupported Versions

List of previous versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |

|---|---|---|---|---|---|---|---|

| external-snapshotter v6.3.0 | release-6.3 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v6.3.0 | v1.20 | - | v1.24 |

| external-snapshotter v6.2.2 | release-6.2 | | | | | | |

| external-snapshotter v6.1.0 | release-6.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v6.1.0 | v1.20 | - | v1.24 |

| external-snapshotter v6.0.1 | release-6.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v6.0.1 | v1.20 | - | v1.24 |

| external-snapshotter v5.0.1 | release-5.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v5.0.1 | v1.20 | - | v1.22 |

| external-snapshotter v4.2.1 | release-4.2 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v4.2.1 | v1.20 | - | v1.22 |

| external-snapshotter v4.1.1 | release-4.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v4.1.1 | v1.20 | - | v1.20 |

| external-snapshotter v4.0.1 | release-4.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v4.0.1 | v1.20 | - | v1.20 |

| external-snapshotter v3.0.3 (beta) | release-3.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v3.0.3 | v1.17 | - | v1.17 |

| external-snapshotter v2.1.4 (beta) | release-2.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v2.1.4 | v1.17 | - | v1.17 |

| external-snapshotter v1.2.2 (alpha) | release-1.2 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-snapshotter:v1.2.2 | v1.13 | v1.16 | v1.14 |

| external-snapshotter v0.4.2 (alpha) | release-0.4 | v0.3.0 | v0.3.0 | quay.io/k8scsi/csi-snapshotter:v0.4.2 | v1.12 | v1.16 | v1.12 |

To use the snapshot beta and GA features, a snapshot controller is also required. For more information, see this snapshot-controller page.

Snapshot Beta/GA

Description

The CSI external-snapshotter sidecar watches the Kubernetes API server for VolumeSnapshotContent CRD objects. The CSI external-snapshotter sidecar is also responsible for calling the CSI RPCs CreateSnapshot, DeleteSnapshot, and ListSnapshots.

Volume Group Snapshot support can be enabled with the --enable-volume-group-snapshots option. When it is enabled, the CSI external-snapshotter sidecar also watches the API server for VolumeGroupSnapshotContent CRD objects. It is then responsible for calling the CSI RPCs CreateVolumeGroupSnapshot, DeleteVolumeGroupSnapshot, and GetVolumeGroupSnapshot.

VolumeSnapshotClass and VolumeGroupSnapshotClass Parameters

When provisioning a new volume snapshot, the CSI external-snapshotter sets the map<string, string> parameters field in the CSI CreateSnapshotRequest call to the key/values specified in the VolumeSnapshotClass it is handling.

When volume group snapshot support is enabled, the map<string, string> parameters field is set in the CSI CreateVolumeGroupSnapshotRequest call to the key/values specified in the VolumeGroupSnapshotClass it is handling.

The CSI external-snapshotter also reserves the parameter keys prefixed with csi.storage.k8s.io/. Any VolumeSnapshotClass or VolumeGroupSnapshotClass keys prefixed with csi.storage.k8s.io/ are not passed to the CSI driver as an opaque parameter.

The following reserved VolumeSnapshotClass parameter keys trigger behavior in the CSI external-snapshotter:

- csi.storage.k8s.io/snapshotter-secret-name (v1.0.1+)
- csi.storage.k8s.io/snapshotter-secret-namespace (v1.0.1+)
- csi.storage.k8s.io/snapshotter-list-secret-name (v2.1.0+)
- csi.storage.k8s.io/snapshotter-list-secret-namespace (v2.1.0+)

For more information on how secrets are handled see Secrets & Credentials.
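Resolving one of these secret references from class parameters can be sketched as below. The helper is hypothetical and handles only literal values, with no templating. It treats a name without a namespace, or a namespace without a name, as an error; that rule is an assumption made for illustration. The same helper also works for the provisioner keys listed earlier, for example with kind="node-stage".

```python
from typing import Optional, Tuple

PREFIX = "csi.storage.k8s.io/"


def secret_ref(params: dict, kind: str) -> Optional[Tuple[str, str]]:
    """Return (namespace, name) for e.g. kind='snapshotter', or None if unset."""
    name = params.get(f"{PREFIX}{kind}-secret-name")
    namespace = params.get(f"{PREFIX}{kind}-secret-namespace")
    if not name and not namespace:
        return None  # no secret configured for this operation
    if not name or not namespace:
        # Assumption: both halves are needed to identify a Secret object.
        raise ValueError(f"{kind}-secret-name and {kind}-secret-namespace must be set together")
    return namespace, name
```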

VolumeSnapshot, VolumeSnapshotContent, VolumeGroupSnapshot and VolumeGroupSnapshotContent Parameters

The CSI external-snapshotter (v4.0.0+) introduces the --extra-create-metadata flag, which automatically sets the following map<string, string> parameters in the CSI CreateSnapshotRequest and CreateVolumeGroupSnapshotRequest:

- csi.storage.k8s.io/volumesnapshot/name
- csi.storage.k8s.io/volumesnapshot/namespace
- csi.storage.k8s.io/volumesnapshotcontent/name

These parameters are internally generated using the name and namespace of the source VolumeSnapshot and VolumeSnapshotContent.

For detailed snapshot beta design changes, see the design doc here.

For detailed information about volume snapshot and restore functionality, see Volume Snapshot & Restore.

Usage

CSI drivers that support provisioning volume snapshots and the ability to provision new volumes using those snapshots should use this sidecar container, and advertise the CSI CREATE_DELETE_SNAPSHOT controller capability.

CSI drivers that support provisioning volume group snapshots should also use this sidecar container and advertise the CSI CREATE_DELETE_GET_VOLUME_GROUP_SNAPSHOT group controller capability.

For detailed information (binary parameters, RBAC rules, etc.), see https://github.com/kubernetes-csi/external-snapshotter/blob/release-6.2/README.md.

Deployment

The CSI external-snapshotter is deployed as a sidecar controller. See deployment section for more details.

For an example deployment, see this example which deploys external-snapshotter and external-provisioner with the Hostpath CSI driver.

Snapshot Alpha

Description

The CSI external-snapshotter is a sidecar container that watches the Kubernetes API server for VolumeSnapshot and VolumeSnapshotContent CRD objects.

The creation of a new VolumeSnapshot object referencing a SnapshotClass CRD object corresponding to this driver causes the sidecar container to trigger a CreateSnapshot operation against the specified CSI endpoint to provision a new snapshot. When a new snapshot is successfully provisioned, the sidecar container creates a Kubernetes VolumeSnapshotContent object to represent the new snapshot.

Deleting a VolumeSnapshot object that is bound to a VolumeSnapshotContent owned by this driver, when the content has the Delete deletion policy, causes the sidecar container to trigger a DeleteSnapshot operation against the specified CSI endpoint to delete the snapshot. Once the snapshot is successfully deleted, the sidecar container also deletes the VolumeSnapshotContent object representing the snapshot.

Usage

CSI drivers that support provisioning volume snapshots and the ability to provision new volumes using those snapshots should use this sidecar container, and advertise the CSI CREATE_DELETE_SNAPSHOT controller capability.

For detailed information (binary parameters, RBAC rules, etc.), see https://github.com/kubernetes-csi/external-snapshotter/blob/release-1.2/README.md.

Deployment

The CSI external-snapshotter is deployed as a controller. See deployment section for more details.

For an example deployment, see this example which deploys external-snapshotter and external-provisioner with the Hostpath CSI driver.

CSI livenessprobe

Status and Releases

Git Repository: https://github.com/kubernetes-csi/livenessprobe

Status: GA/Stable

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version |

|---|---|---|---|---|---|---|

| livenessprobe v2.15.0 | release-2.15 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.15.0 | v1.13 | - |

| livenessprobe v2.14.0 | release-2.14 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.14.0 | v1.13 | - |

| livenessprobe v2.13.1 | release-2.13 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.13.1 | v1.13 | - |

Unsupported Versions

List of previous versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version |

|---|---|---|---|---|---|---|

| livenessprobe v2.12.0 | release-2.12 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.12.0 | v1.13 | - |

| livenessprobe v2.11.0 | release-2.11 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.11.0 | v1.13 | - |

| livenessprobe v2.10.0 | release-2.10 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.10.0 | v1.13 | - |

| livenessprobe v2.9.0 | release-2.9 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.9.0 | v1.13 | - |

| livenessprobe v2.8.0 | release-2.8 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.8.0 | v1.13 | - |

| livenessprobe v2.7.0 | release-2.7 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.7.0 | v1.13 | - |

| livenessprobe v2.6.0 | release-2.6 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.6.0 | v1.13 | - |

| livenessprobe v2.5.0 | release-2.5 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.5.0 | v1.13 | - |

| livenessprobe v2.4.0 | release-2.4 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.4.0 | v1.13 | - |

| livenessprobe v2.3.0 | release-2.3 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.3.0 | v1.13 | - |

| livenessprobe v2.2.0 | release-2.2 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.2.0 | v1.13 | - |

| livenessprobe v2.1.0 | release-2.1 | v1.0.0 | - | registry.k8s.io/sig-storage/livenessprobe:v2.1.0 | v1.13 | - |

| livenessprobe v2.0.0 | release-2.0 | v1.0.0 | - | quay.io/k8scsi/livenessprobe:v2.0.0 | v1.13 | - |

| livenessprobe v1.1.0 | release-1.1 | v1.0.0 | - | quay.io/k8scsi/livenessprobe:v1.1.0 | v1.13 | - |

| Unsupported. | No 0.x branch. | v0.3.0 | v0.3.0 | quay.io/k8scsi/livenessprobe:v0.4.1 | v1.10 | v1.16 |

Description

The CSI livenessprobe is a sidecar container that monitors the health of the CSI driver and reports it to Kubernetes through the liveness probe mechanism. This lets Kubernetes detect problems with the driver automatically and restart the driver container in an attempt to recover.
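The mechanism can be sketched as a small HTTP server that answers /healthz with 200 when the driver is healthy and 500 otherwise. A Kubernetes HTTP liveness probe treats any status from 200 to 399 as success. The real sidecar issues a CSI Probe RPC to the driver. This sketch substitutes a plain Python callable for that RPC, binds to localhost, and picks a free port when port=0.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable


def make_health_server(probe: Callable[[], bool], port: int = 0) -> HTTPServer:
    """Serve /healthz: 200 when probe() returns True, 500 otherwise."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            if self.path != "/healthz":
                self.send_error(404)
                return
            try:
                healthy = probe()  # stand-in for the CSI Probe RPC
            except Exception:
                healthy = False  # a failing probe counts as unhealthy
            body = b"ok" if healthy else b"unhealthy"
            self.send_response(200 if healthy else 500)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep the sketch quiet

    return HTTPServer(("127.0.0.1", port), Handler)
```

In a deployment, the driver container's livenessProbe would point an httpGet at this endpoint. Repeated failures would then cause the kubelet to restart that container.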

Usage

All CSI drivers should use the liveness probe to improve the availability of the driver while deployed on Kubernetes.

For detailed information (binary parameters, RBAC rules, etc.), see https://github.com/kubernetes-csi/livenessprobe/blob/master/README.md.

Deployment

The CSI livenessprobe is deployed as part of controller and node deployments. See deployment section for more details.

CSI node-driver-registrar

Status and Releases

Git Repository: https://github.com/kubernetes-csi/node-driver-registrar

Status: GA/Stable

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |

|---|---|---|---|---|---|---|---|

| node-driver-registrar v2.13.0 | release-2.13 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.13.0 | v1.13 | - | v1.25 |

| node-driver-registrar v2.12.0 | release-2.12 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.12.0 | v1.13 | - | v1.25 |

| node-driver-registrar v2.11.1 | release-2.11 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.11.1 | v1.13 | - | v1.25 |

Unsupported Versions

List of previous versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |

|---|---|---|---|---|---|---|---|

| node-driver-registrar v2.9.0 | release-2.9 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.9.0 | v1.13 | - | v1.25 |

| node-driver-registrar v2.8.0 | release-2.8 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.8.0 | v1.13 | - | |

| node-driver-registrar v2.7.0 | release-2.7 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.7.0 | v1.13 | - | |

| node-driver-registrar v2.6.3 | release-2.6 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.6.3 | v1.13 | - | |

| node-driver-registrar v2.5.1 | release-2.5 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.5.1 | v1.13 | - | |

| node-driver-registrar v2.4.0 | release-2.4 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.4.0 | v1.13 | - | |

| node-driver-registrar v2.3.0 | release-2.3 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.3.0 | v1.13 | - | |

| node-driver-registrar v2.2.0 | release-2.2 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.2.0 | v1.13 | - | |

| node-driver-registrar v2.1.0 | release-2.1 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.1.0 | v1.13 | - | |

| node-driver-registrar v2.0.0 | release-2.0 | v1.0.0 | - | registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.0.0 | v1.13 | - | |

| node-driver-registrar v1.2.0 | release-1.2 | v1.0.0 | - | quay.io/k8scsi/csi-node-driver-registrar:v1.2.0 | v1.13 | - | |

| driver-registrar v0.4.2 | release-0.4 | v0.3.0 | v0.3.0 | quay.io/k8scsi/driver-registrar:v0.4.2 | v1.10 | v1.16 | |

Description

The CSI node-driver-registrar is a sidecar container that fetches driver information (using NodeGetInfo) from a CSI endpoint and registers it with the kubelet on that node using the kubelet plugin registration mechanism.

Usage

Kubelet directly issues CSI NodeGetInfo, NodeStageVolume, and NodePublishVolume calls against CSI drivers. It uses the kubelet plugin registration mechanism to discover the Unix domain socket it uses to talk to the CSI driver. Therefore, all CSI drivers should use this sidecar container to register themselves with kubelet.

For detailed information (binary parameters, etc.), see the README of the relevant branch.
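The information kubelet obtains during plugin registration can be sketched as a small data class. The fields mirror the kubelet plugin-registration GetInfo response (type, name, endpoint, supported versions). The socket paths below follow a common convention but are assumptions, and the supported version is only an example value. The real node-driver-registrar takes the driver socket path from its command-line flags.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PluginInfo:
    """Mirror of the fields kubelet reads from a registration socket."""
    type: str
    name: str
    endpoint: str
    supported_versions: List[str] = field(default_factory=list)


def registration_info(driver_name: str, kubelet_dir: str = "/var/lib/kubelet") -> Tuple[str, PluginInfo]:
    """Return (registration socket path, PluginInfo) for an assumed layout."""
    # kubelet watches plugins_registry for new registration sockets.
    reg_socket = f"{kubelet_dir}/plugins_registry/{driver_name}-reg.sock"
    info = PluginInfo(
        type="CSIPlugin",
        name=driver_name,  # must match the GetPluginInfo name
        endpoint=f"{kubelet_dir}/plugins/{driver_name}/csi.sock",  # assumed path
        supported_versions=["1.0.0"],  # example value
    )
    return reg_socket, info
```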

Deployment

The CSI node-driver-registrar is deployed per node. See deployment section for more details.

CSI cluster-driver-registrar

Deprecated

This sidecar container has not been updated since Kubernetes 1.13. As of Kubernetes 1.16, it is officially deprecated.

The purpose of this sidecar container was to automatically register with Kubernetes a CSIDriver object containing information about the driver. Without it, developers and CSI driver vendors now have to add a CSIDriver object to their installation manifest, or to whatever tool installs their CSI driver.

Please see CSIDriver for more information.

Status and Releases

Git Repository: https://github.com/kubernetes-csi/cluster-driver-registrar

Status: Alpha

| Latest stable release | Branch | Compatible with CSI Version | Container Image | Min k8s Version | Max k8s version |

|---|---|---|---|---|---|

| cluster-driver-registrar v1.0.1 | release-1.0 | v1.0.0 | quay.io/k8scsi/csi-cluster-driver-registrar:v1.0.1 | v1.13 | - |

| driver-registrar v0.4.2 | release-0.4 | v0.3.0 | quay.io/k8scsi/driver-registrar:v0.4.2 | v1.10 | - |

Description

The CSI cluster-driver-registrar is a sidecar container that registers a CSI Driver with a Kubernetes cluster by creating a CSIDriver Object which enables the driver to customize how Kubernetes interacts with it.

Usage

CSI drivers that use one of the following Kubernetes features should use this sidecar container:

- Skip Attach
  - For drivers that don't support ControllerPublishVolume, this indicates to Kubernetes to skip the attach operation and eliminates the need to deploy the external-attacher sidecar.
- Pod Info on Mount
  - This causes Kubernetes to pass metadata such as Pod name and namespace to the NodePublishVolume call.

If you are not using one of these features, this sidecar container (and the creation of the CSIDriver Object) is not required. However, it is still recommended, because the CSIDriver Object makes it easier for users to discover the CSI drivers installed on their clusters.
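Drivers now ship their own CSIDriver object, so one option is to generate it from the driver's capabilities. The helper below is a hypothetical sketch. attachRequired and podInfoOnMount are the storage.k8s.io/v1 CSIDriver fields behind Skip Attach and Pod Info on Mount. metadata.name must match the name the driver returns from GetPluginInfo.

```python
def csidriver_manifest(name: str, supports_controller_publish: bool, needs_pod_info: bool) -> dict:
    """Build a storage.k8s.io/v1 CSIDriver object from driver capabilities."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "CSIDriver",
        "metadata": {"name": name},  # must match the GetPluginInfo name
        "spec": {
            # Skip Attach: without ControllerPublishVolume there is no attach step.
            "attachRequired": supports_controller_publish,
            # Pod Info on Mount: pass Pod name/namespace to NodePublishVolume.
            "podInfoOnMount": needs_pod_info,
        },
    }
```

Serializing the result with json.dumps yields a manifest that kubectl apply -f accepts, because kubectl reads JSON as well as YAML.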

For detailed information (binary parameters, etc.), see the README of the relevant branch.

Deployment

The CSI cluster-driver-registrar is deployed as a controller. See deployment section for more details.

CSI external-health-monitor-controller

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-health-monitor

Status: Alpha

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image |

|---|---|---|---|---|

| external-health-monitor-controller v0.14.0 | release-0.14 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.14.0 |

| external-health-monitor-controller v0.13.0 | release-0.13 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.13.0 |

| external-health-monitor-controller v0.12.1 | release-0.12 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.12.1 |

Unsupported Versions

List of previous versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image |

|---|---|---|---|---|

| external-health-monitor-controller v0.10.0 | release-0.10 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.10.0 |

| external-health-monitor-controller v0.9.0 | release-0.9 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.9.0 |

| external-health-monitor-controller v0.8.0 | release-0.8 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.8.0 |

| external-health-monitor-controller v0.7.0 | release-0.7 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.7.0 |

| external-health-monitor-controller v0.6.0 | release-0.6 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.6.0 |

| external-health-monitor-controller v0.4.0 | release-0.4 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.4.0 |

| external-health-monitor-controller v0.3.0 | release-0.3 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.3.0 |

| external-health-monitor-controller v0.2.0 | release-0.2 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.2.0 |

Description

The CSI external-health-monitor-controller is a sidecar container that is deployed together with the CSI controller driver, similar to how the CSI external-provisioner sidecar is deployed. It calls the CSI controller RPC ListVolumes or ControllerGetVolume to check the health condition of the CSI volumes. If a volume's condition is abnormal, it reports an event on the PersistentVolumeClaim.

The CSI external-health-monitor-controller can also watch for node failure events. This is enabled by setting the enable-node-watcher flag to true, and it currently affects only local PVs. When a node failure is detected, an event is reported on the PVC to indicate that pods using this PVC are on a failed node.

Usage

CSI drivers that support VOLUME_CONDITION and LIST_VOLUMES or VOLUME_CONDITION and GET_VOLUME controller capabilities should use this sidecar container.
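The polling RPC to use follows from the capability combinations in the usage note above. The sketch below prefers ListVolumes when both are available, on the assumption that one list call is cheaper than one call per volume; verify the sidecar's actual ordering before relying on it. Volume conditions are modeled as dicts with the abnormal and message fields of the CSI VolumeCondition message.

```python
from typing import Dict, List, Optional, Set


def select_health_rpc(controller_caps: Set[str]) -> Optional[str]:
    """Pick the RPC used to poll volume health, or None if unsupported."""
    if "VOLUME_CONDITION" not in controller_caps:
        return None  # the driver cannot report volume conditions
    if "LIST_VOLUMES" in controller_caps:
        return "ListVolumes"  # one call covers every volume
    if "GET_VOLUME" in controller_caps:
        return "ControllerGetVolume"  # one call per volume
    return None


def abnormal_volumes(conditions: Dict[str, dict]) -> List[str]:
    """Return the sorted IDs of volumes whose condition is abnormal."""
    return sorted(vid for vid, cond in conditions.items() if cond.get("abnormal"))
```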

For detailed information (binary parameters, RBAC rules, etc.), see https://github.com/kubernetes-csi/external-health-monitor/blob/master/README.md.

Deployment

The CSI external-health-monitor-controller is deployed as a controller. See https://github.com/kubernetes-csi/external-health-monitor/blob/master/README.md for more details.

CSI external-health-monitor-agent

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-health-monitor

Status: Deprecated

Unsupported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image |

|---|---|---|---|---|

| external-health-monitor-agent v0.2.0 | release-0.2 | v1.3.0 | - | registry.k8s.io/sig-storage/csi-external-health-monitor-agent:v0.2.0 |

Description

Note: This sidecar has been deprecated and replaced with the CSIVolumeHealth feature in Kubernetes.

The CSI external-health-monitor-agent is a sidecar container that is deployed together with the CSI node driver, similar to how the CSI node-driver-registrar sidecar is deployed. It calls the CSI node RPC NodeGetVolumeStats to check the health condition of the CSI volumes. If a volume's condition is abnormal, it reports an event on the Pod.

Usage

CSI drivers that support VOLUME_CONDITION and NODE_GET_VOLUME_STATS node capabilities should use this sidecar container.

For detailed information (binary parameters, RBAC rules, etc.), see https://github.com/kubernetes-csi/external-health-monitor/blob/master/README.md.

Deployment

The CSI external-health-monitor-agent is deployed as a DaemonSet. See https://github.com/kubernetes-csi/external-health-monitor/blob/master/README.md for more details.

CSI external-snapshot-metadata

Status and Releases

Git Repository: https://github.com/kubernetes-csi/external-snapshot-metadata

Supported Versions

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |
|---|---|---|---|---|---|---|---|
| v0.1.0 | v0.1.0 | v1.10.0 | - | registry.k8s.io/sig-storage/csi-snapshot-metadata:v0.1.0 | v1.33 | - | v1.33 |

Status: Alpha

Description

This sidecar securely serves snapshot metadata to Kubernetes clients through the Kubernetes SnapshotMetadata Service API. This API is similar to the CSI SnapshotMetadata Service but is designed for use by authenticated and authorized Kubernetes backup applications. Its protobuf specification is available in the sidecar repository.

The sidecar authenticates and authorizes each Kubernetes backup application request made through the Kubernetes SnapshotMetadata Service API. It then acts as a proxy: it fetches the requested metadata from the CSI driver and streams it directly to the requesting application, placing no load on the Kubernetes API server.

See "The External Snapshot Metadata Sidecar" section in the CSI Changed Block Tracking KEP for additional details on the sidecar.

Usage

Backup applications, identified by their authorized ServiceAccount objects, directly communicate with the sidecar using the Kubernetes SnapshotMetadata Service API. The authorization needed is described in the "Risks and Mitigations" section of the CSI Changed Block Tracking KEP. In particular, this requires the ability to use the Kubernetes TokenRequest API and to access the objects required to use the API.

The availability of this optional feature is advertised to backup applications by the presence of a SnapshotMetadataService CR that is named for the CSI driver that provisioned the PersistentVolume and VolumeSnapshot objects involved. The CR contains a gRPC service endpoint, a CA certificate, and an audience string for authentication. A backup application must use the Kubernetes TokenRequest API with the audience string to obtain a Kubernetes authentication token for use in the Kubernetes SnapshotMetadata Service API call. The backup application should establish trust for the CA certificate before making the gRPC call to the service endpoint.
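
Establishing trust for the advertised CA certificate can be done with the Go standard library alone. The sketch below assumes the backup application has already read the endpoint, CA certificate, and audience from the CR into a hypothetical serviceInfo struct, that the endpoint has host:port form, and that the certificate is PEM-encoded. The token request and the gRPC call itself are omitted; the resulting *tls.Config could be passed to grpc-go's credentials.NewTLS when dialing.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"fmt"
	"net"
)

// serviceInfo is a hypothetical stand-in for the values a backup
// application reads from the SnapshotMetadataService CR.
type serviceInfo struct {
	Address  string // gRPC endpoint, assumed to be "host:port"
	CACert   []byte // CA certificate, assumed to be PEM-encoded
	Audience string // used with the TokenRequest API (not shown here)
}

// clientTLSConfig builds a TLS client configuration that trusts only the
// CA certificate advertised in the CR and verifies the server host name.
func clientTLSConfig(info serviceInfo) (*tls.Config, error) {
	if info.Audience == "" {
		return nil, errors.New("CR has no audience; cannot request an authentication token")
	}
	host, _, err := net.SplitHostPort(info.Address)
	if err != nil {
		return nil, fmt.Errorf("invalid service address %q: %w", info.Address, err)
	}
	pool := x509.NewCertPool()
	if !pool.AppendCertsFromPEM(info.CACert) {
		return nil, errors.New("no PEM certificate found in CR CA certificate")
	}
	return &tls.Config{
		RootCAs:    pool, // trust only the advertised CA
		ServerName: host, // server certificate must match this host name
		MinVersion: tls.VersionTLS12,
	}, nil
}

func main() {
	_, err := clientTLSConfig(serviceInfo{
		Address:  "snapshot-metadata.example-ns:6443",
		CACert:   []byte("not a certificate"),
		Audience: "example-audience",
	})
	fmt.Println(err) // no PEM certificate found in CR CA certificate
}
```

Using a dedicated certificate pool, rather than the system roots, means the client accepts only a server presenting a certificate chained to the CA the CR advertises.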

The Kubernetes SnapshotMetadata Service API uses a gRPC stream to return VolumeSnapshot metadata to the backup application. Metadata can be lengthy, so the API supports restarting an interrupted metadata request from an intermediate point in case of failure. The Resources section below describes the programming artifacts available to support backup application use of this API.

Deployment

The CSI external-snapshot-metadata sidecar should be deployed by CSI drivers that support the Changed Block Tracking feature.

The sidecar must be deployed in the same pod as the CSI driver and will communicate with its gRPC CSI SnapshotMetadata Service and CSI Identity Service over a UNIX domain socket.

The sidecar should be configured to run under the authority of its CSI driver ServiceAccount, which must be authorized as described in the "Risks and Mitigations" section of the CSI Changed Block Tracking KEP. In particular, this requires the ability to use the Kubernetes TokenReview and SubjectAccessReview APIs.

A Service object must be created to expose the endpoints of the Kubernetes SnapshotMetadata Service API gRPC server implemented by the sidecar.

A SnapshotMetadataService CR, named for the CSI driver, must be created to advertise the availability of this optional feature to Kubernetes backup application clients. The CR contains the CA certificate and Service endpoint address of the sidecar and the audience string needed for the client authentication token.

Resources

The external-snapshot-metadata repository contains the protobuf specification of the Kubernetes SnapshotMetadata Service API.

In addition, the repository has a number of useful artifacts to support Go language programs:

- The Go client interface for the Kubernetes SnapshotMetadata Service API.
- The pkg/iterator client helper package. This may be used by backup applications instead of working directly with the Kubernetes SnapshotMetadata Service gRPC client interfaces. The snapshot-metadata-lister example Go command illustrates the use of this helper package.
- Go language CSI SnapshotMetadataService API client mocks.

The sample Hostpath CSI driver has been extended to support the Changed Block Tracking feature and illustrates how to deploy a CSI driver that supports it.

CSI objects

The Kubernetes API contains the following CSI-specific objects:

The schema definition for the objects can be found in the Kubernetes API reference.

CSIDriver Object

Status

- Kubernetes 1.12 - 1.13: Alpha

- Kubernetes 1.14: Beta

- Kubernetes 1.18: GA

What is the CSIDriver object?

The CSIDriver Kubernetes API object serves two purposes:

- Simplify driver discovery
  - If a CSI driver creates a `CSIDriver` object, Kubernetes users can easily discover the CSI drivers installed on their cluster (simply by issuing `kubectl get CSIDriver`).
- Customizing Kubernetes behavior
  - Kubernetes has a default set of behaviors when dealing with CSI drivers (for example, it calls the `Attach`/`Detach` operations by default). This object allows a CSI driver to specify how Kubernetes should interact with it.

What fields does the CSIDriver object have?

Here is an example of a v1 CSIDriver object:

```yaml
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: mycsidriver.example.com
spec:
  attachRequired: true
  podInfoOnMount: true
  fsGroupPolicy: File # added in Kubernetes 1.19, this field is GA as of Kubernetes 1.23
  volumeLifecycleModes: # added in Kubernetes 1.16, this field is beta
    - Persistent
    - Ephemeral
  tokenRequests: # added in Kubernetes 1.20. See status at https://kubernetes-csi.github.io/docs/token-requests.html#status
    - audience: "gcp"
    - audience: "" # empty string means defaulting to the `--api-audiences` of kube-apiserver
      expirationSeconds: 3600
  requiresRepublish: true # added in Kubernetes 1.20. See status at https://kubernetes-csi.github.io/docs/token-requests.html#status
  seLinuxMount: true # added in Kubernetes 1.25
```

These are the important fields:

- `name`
  - This should correspond to the full name of the CSI driver.

- `attachRequired`
  - Indicates this CSI volume driver requires an attach operation (because it implements the CSI `ControllerPublishVolume` method), and that Kubernetes should call attach and wait for any attach operation to complete before proceeding to mounting.
  - If a `CSIDriver` object does not exist for a given CSI driver, the default is `true`, meaning attach will be called.
  - If a `CSIDriver` object exists for a given CSI driver but this field is not specified, it also defaults to `true`, meaning attach will be called.
  - For more information see Skip Attach.


- `podInfoOnMount`
  - Indicates this CSI volume driver requires additional pod information (like pod name, pod UID, etc.) during mount operations.
  - If the value is not specified or is `false`, pod information will not be passed on mount.
  - If the value is set to `true`, Kubelet will pass pod information as `volume_context` in CSI `NodePublishVolume` calls:
    - `"csi.storage.k8s.io/pod.name": pod.Name`
    - `"csi.storage.k8s.io/pod.namespace": pod.Namespace`
    - `"csi.storage.k8s.io/pod.uid": string(pod.UID)`
    - `"csi.storage.k8s.io/serviceAccount.name": pod.Spec.ServiceAccountName`
  - For more information see Pod Info on Mount.

- `fsGroupPolicy`
  - This field was added in Kubernetes 1.19 and cannot be set when using an older Kubernetes release.
  - This field is beta in Kubernetes 1.20 and GA in Kubernetes 1.23.
  - Controls whether this CSI volume driver supports volume ownership and permission changes when volumes are mounted.
  - The following modes are supported, and if not specified the default is `ReadWriteOnceWithFSType`:
    - `None`: Indicates that volumes will be mounted with no modifications, as the CSI volume driver does not support these operations.
    - `File`: Indicates that the CSI volume driver supports volume ownership and permission change via fsGroup, and Kubernetes may use fsGroup to change permissions and ownership of the volume to match the user-requested fsGroup in the pod's SecurityPolicy regardless of fstype or access mode.
    - `ReadWriteOnceWithFSType`: Indicates that volumes will be examined to determine if volume ownership and permissions should be modified to match the pod's security policy. Changes will only occur if the `fsType` is defined and the persistent volume's `accessModes` contains `ReadWriteOnce`. This is the default behavior if no other FSGroupPolicy is defined.
  - For more information see CSI Driver fsGroup Support.

- `volumeLifecycleModes`
  - This field was added in Kubernetes 1.16 and cannot be set when using an older Kubernetes release.
  - This field is beta.
  - It informs Kubernetes about the volume modes that are supported by the driver. This ensures that the driver is not used incorrectly by users. The default is `Persistent`, which is the normal PVC/PV mechanism. `Ephemeral` enables inline ephemeral volumes either in addition to normal volumes (when both are listed) or instead of them (when it is the only entry in the list).

- `tokenRequests`
  - This field was added in Kubernetes 1.20 and cannot be set when using an older Kubernetes release.
  - This field is enabled by default in Kubernetes 1.21 and cannot be disabled since 1.22.
  - If this field is specified, Kubelet will plumb down the bound service account tokens of the pod as `volume_context` in the `NodePublishVolume` call:
    - `"csi.storage.k8s.io/serviceAccount.tokens": {"gcp":{"token":"<token>","expirationTimestamp":"<expiration timestamp in RFC3339>"}}`
  - If the CSI driver doesn't find the token recorded in the `volume_context`, it should return an error in `NodePublishVolume` to inform Kubelet to retry.
  - Audiences should be distinct, otherwise the validation will fail. If the audience is "", the issued token has the same audience as kube-apiserver.

- `requiresRepublish`
  - This field was added in Kubernetes 1.20 and cannot be set when using an older Kubernetes release.
  - This field is enabled by default in Kubernetes 1.21 and cannot be disabled since 1.22.
  - If this field is `true`, Kubelet will periodically call `NodePublishVolume`. This is useful in the following scenarios:
    - If the volume mounted by the CSI driver is short-lived.
    - If the CSI driver repeatedly requires valid service account tokens (enabled by the field `tokenRequests`).
  - CSI drivers should only update the contents of the volume, and do so atomically. A change of the mount point itself will not be seen by a running container.

- `seLinuxMount`
  - This field is alpha in Kubernetes 1.25. It must be explicitly enabled by setting the feature gates `ReadWriteOncePod` and `SELinuxMountReadWriteOncePod`.
  - The default value of this field is `false`.
  - When set to `true`, the corresponding CSI driver announces that all its volumes are independent volumes from the Linux kernel's point of view and each of them can be mounted with a different SELinux label mount option (`-o context=<SELinux label>`). Examples:
    - A CSI driver that creates block devices formatted with a filesystem, such as `xfs` or `ext4`, can set `seLinuxMount: true`, because each volume has its own block device.
    - A CSI driver whose volumes are always separate exports on an NFS server can set `seLinuxMount: true`, because each volume has its own NFS export and thus the Linux kernel treats them as independent volumes.
    - A CSI driver that can provide two volumes as subdirectories of a common NFS export must set `seLinuxMount: false`, because these two volumes are treated as a single volume by the Linux kernel and must share the same `-o context=<SELinux label>` option.


  - See the corresponding KEP for details.
  - Always test Pods with various SELinux contexts and various volume configurations before setting this field to `true`!
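
The defaulting rules for `attachRequired`, `volumeLifecycleModes`, and `fsGroupPolicy` can be summarized in a short Go sketch. It restates the rules described in this section rather than reproducing Kubelet code. The csiDriverSpec type and the function names are hypothetical. Treating a missing CSIDriver object (nil) like unset `volumeLifecycleModes` and `fsGroupPolicy` fields is an assumption of the sketch, and fsGroupApplies assumes the pod actually requests an fsGroup.

```go
package main

import "fmt"

// csiDriverSpec is a hypothetical subset of the CSIDriver spec. Nil
// pointers and empty slices model "field not specified".
type csiDriverSpec struct {
	AttachRequired       *bool
	FSGroupPolicy        *string
	VolumeLifecycleModes []string
}

// attachRequired: no CSIDriver object (nil) or an unset field both mean true.
func attachRequired(d *csiDriverSpec) bool {
	if d == nil || d.AttachRequired == nil {
		return true
	}
	return *d.AttachRequired
}

// supportsLifecycleMode applies the Persistent default for volumeLifecycleModes.
func supportsLifecycleMode(d *csiDriverSpec, mode string) bool {
	modes := []string{"Persistent"}
	if d != nil && len(d.VolumeLifecycleModes) > 0 {
		modes = d.VolumeLifecycleModes
	}
	for _, m := range modes {
		if m == mode {
			return true
		}
	}
	return false
}

// fsGroupApplies reports whether ownership and permissions would be changed
// for a pod that requests an fsGroup, following the three fsGroupPolicy modes.
func fsGroupApplies(d *csiDriverSpec, fsType string, accessModes []string) bool {
	policy := "ReadWriteOnceWithFSType" // default when unspecified
	if d != nil && d.FSGroupPolicy != nil {
		policy = *d.FSGroupPolicy
	}
	switch policy {
	case "None":
		return false
	case "File":
		return true
	case "ReadWriteOnceWithFSType":
		if fsType == "" {
			return false
		}
		for _, m := range accessModes {
			if m == "ReadWriteOnce" {
				return true
			}
		}
		return false
	default:
		return false // unknown value; API validation should reject it
	}
}

func main() {
	file := "File"
	d := &csiDriverSpec{FSGroupPolicy: &file, VolumeLifecycleModes: []string{"Ephemeral"}}
	fmt.Println(attachRequired(nil), attachRequired(d))                  // true true
	fmt.Println(supportsLifecycleMode(d, "Persistent"))                 // false
	fmt.Println(fsGroupApplies(nil, "ext4", []string{"ReadWriteMany"})) // false
}
```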
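
On the driver side, `podInfoOnMount` and `tokenRequests` both surface as entries in the `volume_context` of `NodePublishVolume`. This Go sketch parses them using only the keys and the JSON shape listed for those fields, treating `volume_context` as a plain `map[string]string`. The helper names are hypothetical. Returning an error when the tokens are missing matches the retry behavior described for `tokenRequests`.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"time"
)

// volume_context keys set by Kubelet for podInfoOnMount and tokenRequests.
const (
	keyPodName        = "csi.storage.k8s.io/pod.name"
	keyPodNamespace   = "csi.storage.k8s.io/pod.namespace"
	keyPodUID         = "csi.storage.k8s.io/pod.uid"
	keyServiceAccount = "csi.storage.k8s.io/serviceAccount.name"
	keySATokens       = "csi.storage.k8s.io/serviceAccount.tokens"
)

type podInfo struct {
	Name, Namespace, UID, ServiceAccount string
}

// saToken matches one entry of the serviceAccount.tokens JSON value.
type saToken struct {
	Token               string    `json:"token"`
	ExpirationTimestamp time.Time `json:"expirationTimestamp"` // RFC 3339
}

// podInfoFrom returns the pod identity, or false when the keys are absent
// (for example because podInfoOnMount is not enabled).
func podInfoFrom(vc map[string]string) (podInfo, bool) {
	name, ok := vc[keyPodName]
	if !ok {
		return podInfo{}, false
	}
	return podInfo{Name: name, Namespace: vc[keyPodNamespace], UID: vc[keyPodUID], ServiceAccount: vc[keyServiceAccount]}, true
}

// tokenFor returns the token for one audience. Anything missing is an
// error, so NodePublishVolume can fail and Kubelet will retry.
func tokenFor(vc map[string]string, audience string) (saToken, error) {
	raw, ok := vc[keySATokens]
	if !ok {
		return saToken{}, errors.New("service account tokens not present in volume_context")
	}
	var tokens map[string]saToken
	if err := json.Unmarshal([]byte(raw), &tokens); err != nil {
		return saToken{}, fmt.Errorf("malformed %s: %w", keySATokens, err)
	}
	tok, ok := tokens[audience]
	if !ok || tok.Token == "" {
		return saToken{}, fmt.Errorf("no token for audience %q", audience)
	}
	return tok, nil
}

func main() {
	vc := map[string]string{
		keyPodName:        "web-0",
		keyPodNamespace:   "default",
		keyPodUID:         "0f0c7d3e",
		keyServiceAccount: "default",
		keySATokens:       `{"gcp":{"token":"<token>","expirationTimestamp":"2030-01-01T00:00:00Z"}}`,
	}
	info, _ := podInfoFrom(vc)
	tok, err := tokenFor(vc, "gcp")
	fmt.Println(info.Name, tok.Token, tok.ExpirationTimestamp.Year(), err) // web-0 <token> 2030 <nil>
}
```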